Direct S3 access

Published: Sun 16 August 2020

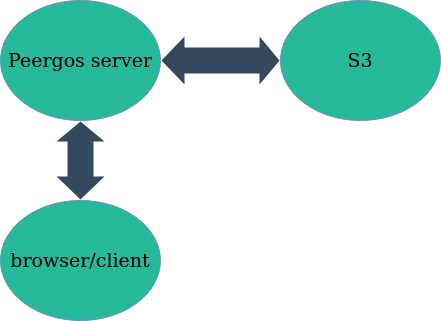

Peergos uses IPFS to store its data, and IPFS supports several kinds of blockstores, from various kinds of local disk based storage to an S3 based store. The S3 store is useful because it automatically provides redundancy and availability, and it can hold much more data than any single disk. There are also now many providers of S3 compatible storage. We have supported the S3 based blockstore in Peergos for a while. However, this wasn't visible to end users; it was purely a server side feature. This meant that all data being uploaded or downloaded had to pass through the Peergos server.

The flow of data in previous S3 usage, proxied through the Peergos server

We have now implemented direct S3 access for reads and writes from the browser/client. Clients can read and write directly to an S3 bucket after authorization from the Peergos server. This new feature makes the Peergos server much more scalable and reliable. The typical bandwidth required of the Peergos server is reduced by ~100x.

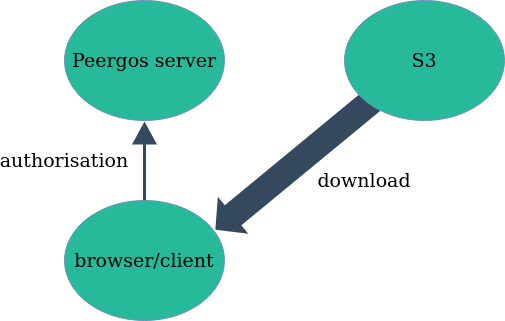

There are two modes of read operation: public and authorised. In public mode, all encrypted blocks can be read directly from S3 without any permission. This is the fastest, but may not suit your pricing model for S3. In authorised mode, every read request first has to get permission from the Peergos server, which signs a URL that is temporarily authorised to read that object from S3. The client then downloads the block directly from S3. This is illustrated below.

Direct S3 reads

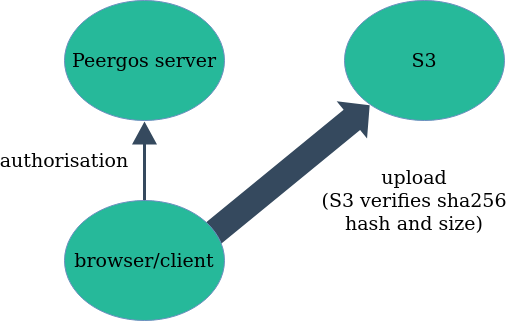
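For the authorised read mode, a temporarily valid URL of this kind can be produced with AWS Signature Version 4 query-string authentication, the scheme S3 uses for presigned URLs. The sketch below is a minimal Python illustration under that assumption, not Peergos's own client code. The endpoint, object key, credentials, region and the 300 second default expiry are hypothetical placeholders. It needs nothing beyond SHA-256 and HMAC-SHA256 from the standard library.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote


def hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret: str, date: str, region: str) -> bytes:
    # SigV4: derive a day/region/service scoped key by chaining HMAC-SHA256.
    k = hmac_sha256(("AWS4" + secret).encode("utf-8"), date)
    k = hmac_sha256(k, region)
    k = hmac_sha256(k, "s3")
    return hmac_sha256(k, "aws4_request")


def presign_get(host: str, key: str, access_key: str, secret: str,
                region: str, now: datetime.datetime, expires: int = 300) -> str:
    """Return a URL allowing a GET of `key` for `expires` seconds after `now` (UTC)."""
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date = now.strftime("%Y%m%d")
    scope = f"{date}/{region}/s3/aws4_request"
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    # Query parameters are URI-encoded (including "/") and sorted by name.
    query = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}"
                     for k, v in sorted(params.items()))
    path = "/" + quote(key, safe="/")
    canonical_request = "\n".join([
        "GET", path, query,
        f"host:{host}\n",    # each canonical header line ends with a newline
        "host",              # names of the signed headers
        "UNSIGNED-PAYLOAD",  # a read has no request body to hash
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    signature = hmac.new(signing_key(secret, date, region),
                         string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"https://{host}{path}?{query}&X-Amz-Signature={signature}"


if __name__ == "__main__":
    # Hypothetical endpoint, block key and credentials, for illustration only.
    print(presign_get("my-bucket.s3.example.com", "some-block-key",
                      "EXAMPLEACCESSKEY", "example-secret", "us-east-1",
                      datetime.datetime.now(datetime.timezone.utc)))
```

In this design the server only performs the signing step; the block bytes travel straight from S3 to the client, which makes a plain GET on the returned URL before it expires.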

This is all very well, but what about writes? What if there are many users on the same server? This is where it starts to get beautiful... All users on the same Peergos server share the same S3 bucket, and yet there is no possibility of conflict! This is because the blocks in S3 are content addressed: a block's key is derived from the hash of its contents, so two writes to the same key must carry the same data. When a client wants to upload a block, it first pre-authorises the block hash with the Peergos server, which returns a signed URL the client can use to upload directly to S3. On upload, S3 automatically checks the sha256 hash and size of the block against what was signed. The data flow is shown below.

Direct S3 writes
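For writes, the server can bind a presigned upload URL to one specific hash and size using AWS Signature Version 4 query-string authentication. In this minimal Python sketch (an illustration, not Peergos's code), the server signs the `content-length` and `x-amz-content-sha256` headers, so S3 should reject any PUT whose body does not match. Two simplifications are assumptions: the hex SHA-256 digest is used directly as the object key, and it is also used as the payload-hash line of the canonical request. Check both against your provider's SigV4 documentation. `presign_put` and all endpoints, credentials and values are hypothetical.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote


def hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret: str, date: str, region: str) -> bytes:
    # SigV4 key derivation: chain HMAC-SHA256 over date, region and service.
    k = hmac_sha256(("AWS4" + secret).encode("utf-8"), date)
    k = hmac_sha256(k, region)
    k = hmac_sha256(k, "s3")
    return hmac_sha256(k, "aws4_request")


def presign_put(host: str, sha256_hex: str, size: int, access_key: str,
                secret: str, region: str, now: datetime.datetime,
                expires: int = 300):
    """Server side: authorise one upload of `size` bytes whose SHA-256 is `sha256_hex`.

    Returns (url, headers); the client must send these headers with its PUT.
    """
    key = sha256_hex  # content addressed; simplified here to the hex digest
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date = now.strftime("%Y%m%d")
    scope = f"{date}/{region}/s3/aws4_request"
    # Signing these headers ties the URL to this exact hash and length.
    headers = {"content-length": str(size), "host": host,
               "x-amz-content-sha256": sha256_hex}
    signed_headers = ";".join(sorted(headers))
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": signed_headers,
    }
    query = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}"
                     for k, v in sorted(params.items()))
    path = "/" + quote(key, safe="/")
    canonical_headers = "".join(f"{k}:{headers[k]}\n" for k in sorted(headers))
    canonical_request = "\n".join([
        "PUT", path, query, canonical_headers, signed_headers,
        sha256_hex,  # assumption: payload hash line carries the block's hash
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    signature = hmac.new(signing_key(secret, date, region),
                         string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    url = f"https://{host}{path}?{query}&X-Amz-Signature={signature}"
    # "host" comes from the URL itself; the client sets the other two headers.
    return url, {k: v for k, v in headers.items() if k != "host"}


if __name__ == "__main__":
    block = b"an encrypted block"                # held by the client
    digest = hashlib.sha256(block).hexdigest()  # hashed locally by the client
    url, put_headers = presign_put("my-bucket.s3.example.com", digest, len(block),
                                   "EXAMPLEACCESSKEY", "example-secret", "us-east-1",
                                   datetime.datetime.now(datetime.timezone.utc))
    print(url)
    print(put_headers)
```

In this flow the client sends only the hash and size to the Peergos server, then PUTs the block to the returned URL with the returned headers. Because the key is derived from the hash, two users uploading the same block produce identical writes, which is why sharing one bucket is safe.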

It should be clear how this takes most of the load off the Peergos server, allowing a single server to handle many more users.

The functionality required for this isn't actually exposed by the official S3 SDK, so to get this working we implemented our own S3 client. It's remarkably simple: a single class, with no dependencies except an HMAC-SHA256 implementation. An added benefit is that we've been able to remove the Amazon S3 SDK entirely from our codebase, which was 60 MB of dependencies! Compare this with the rest of Peergos, including all its dependencies, which adds up to only 16 MB.

Want to be part of the future? Create an account on https://peergos.net or self-host your own private personal datastore.
